An Overview of the Hyper-V Architecture

Latest revision as of 18:41, 29 May 2016

This chapter of Hyper-V Essentials provides a high-level overview of the architecture of Microsoft's Hyper-V server virtualization technology.

Hyper-V and Type 1 Virtualization

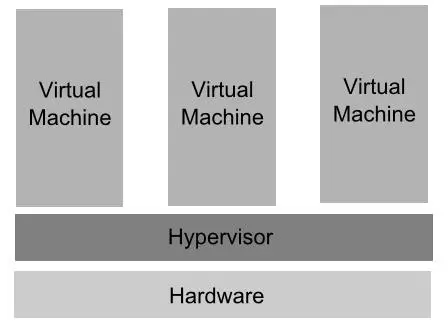

Hyper-V implements what is commonly referred to as Type 1 Hypervisor virtualization. In this scenario, a hypervisor runs directly on the hardware of the host system and is responsible for sharing the physical hardware resources with multiple virtual machines. This concept is illustrated in the following diagram:

In basic terms, the primary purpose of the hypervisor is to manage the physical CPU and memory allocation between the various virtual machines running on the host system.

Hardware Assisted Virtualization

Hyper-V will only run on processors which support hardware assisted virtualization. Before looking at Hyper-V in detail, it is worth providing a brief overview of what this hardware assisted virtualization actually means.

The x86 family of CPUs provides a range of protection levels, also known as rings, in which code can execute. Ring 0 has the highest level of privilege and it is in this ring that the operating system kernel normally runs. Code executing in ring 0 is said to be running in system space, kernel mode or supervisor mode. All other code, such as applications running on the operating system, operates in less privileged rings, typically ring 3.

Under hypervisor virtualization, a program known as a hypervisor runs directly on the hardware of the host system in ring 0. The hypervisor handles tasks such as CPU and memory resource allocation for the virtual machines, in addition to providing interfaces for higher level administration and monitoring tools.

Clearly, if the hypervisor is going to occupy ring 0 of the CPU, the kernels for any guest operating systems running on the system must run in less privileged CPU rings. Unfortunately, most operating system kernels are written explicitly to run in ring 0 for the simple reason that they need to perform tasks that are only available in that ring, such as the ability to execute privileged CPU instructions and directly manipulate memory. One solution to this problem is to modify the guest operating systems, replacing any privileged operations that will only run in ring 0 of the CPU with calls to the hypervisor (known as hypercalls). The hypervisor in turn performs the task on behalf of the guest system.
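The hypercall approach described above can be sketched as a toy model. This is only an illustration of the idea, not Hyper-V's actual interface: the class names, the `map_page` operation and the dictionary-based page tables are all hypothetical.

```python
class ToyHypervisor:
    """Hypothetical hypervisor: owns privileged state on behalf of guests."""

    def __init__(self):
        # guest name -> {virtual page number: physical page number}
        self.page_tables = {}

    def hypercall(self, guest, operation, **args):
        """Perform a privileged operation requested by a guest kernel."""
        if operation == "map_page":
            table = self.page_tables.setdefault(guest, {})
            table[args["virtual"]] = args["physical"]
            return "ok"
        raise ValueError(f"unsupported hypercall: {operation}")


class ModifiedGuestKernel:
    """Hypothetical guest kernel that has been modified to use hypercalls."""

    def __init__(self, name, hypervisor):
        self.name = name
        self.hypervisor = hypervisor

    def map_page(self, virtual, physical):
        # An unmodified kernel would update the page table itself in ring 0.
        # The modified kernel asks the hypervisor to do it instead.
        return self.hypervisor.hypercall(
            self.name, "map_page", virtual=virtual, physical=physical
        )


hypervisor = ToyHypervisor()
guest = ModifiedGuestKernel("guest-a", hypervisor)
print(guest.map_page(virtual=1, physical=42))
print(hypervisor.page_tables)
```

The point of the sketch is the division of labour: the guest never touches the privileged state directly, and every change goes through a single entry point that the hypervisor controls.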

Another solution is to leverage the hardware assisted virtualization features of the latest generation of processors from both Intel and AMD. These technologies, known as Intel VT and AMD-V respectively, provide the extensions necessary to run unmodified guest virtual machines. In very simple terms, these processors provide an additional privilege mode (referred to as ring -1) that is more privileged than ring 0 and in which the hypervisor can operate, leaving ring 0 available for unmodified guest operating systems.
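As a rough illustration of checking for these extensions, the sketch below scans Linux-style `/proc/cpuinfo` text for the `vmx` (Intel VT) and `svm` (AMD-V) CPU flags. The Linux approach and the function name `detect_hw_virtualization` are assumptions made for this example; the chapter itself does not describe a detection method, and a Windows host would use different tooling.

```python
from typing import Optional


def detect_hw_virtualization(cpuinfo_text: str) -> Optional[str]:
    """Return 'Intel VT', 'AMD-V', or None based on Linux-style CPU flags."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT"
            if "svm" in flags:
                return "AMD-V"
    return None


# Sample text in the /proc/cpuinfo layout (illustrative, not real output).
sample = "processor\t: 0\nflags\t\t: fpu vme de pse vmx sse2\n"
print(detect_hw_virtualization(sample))
```

On a Linux machine the same function could be fed the contents of `/proc/cpuinfo`. Note that a flag being present only shows that the processor supports the feature; it may still be disabled in the system firmware.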

Hyper-V Root and Child Partitions

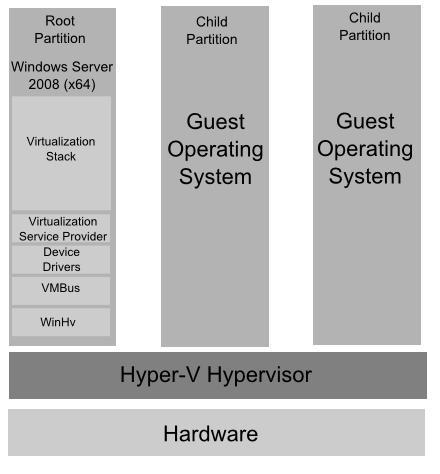

Running on top of the hypervisor are a root partition (also known as a parent partition) and zero or more child partitions (one for each virtual machine) as illustrated below:

The root partition is essentially a virtual machine which runs a copy of 64-bit Windows Server 2008 which, in turn, acts as a host for a number of special Hyper-V components. The root partition is responsible for providing the device drivers for the virtual machines running in the child partitions, managing the child partition lifecycles, power management and event logging. The root partition operating system also hosts the Virtualization Stack which is responsible for performing a wide range of virtualization functions (the Virtualization Stack and other root partition components will be covered in more detail later in this chapter).

Child partitions host the virtual machines in which the guest operating systems run. Hyper-V supports both Hyper-V Aware (also referred to as enlightened) and Hyper-V Unaware guest operating systems.

The Virtualization Stack and Other Root Partition Components

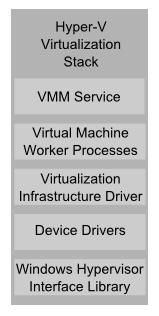

As previously noted, the root partition contains the Virtualization Stack. This is a collection of components that provide a large amount of the Hyper-V functionality. The following diagram provides an abstract outline of the stack:

The following table provides an overview of each of the virtual stack components:

| Component | Description |
|---|---|
| Virtual Machine Management Service (VMM Service) | Manages the state of virtual machines running in the child partitions (active, offline, stopped etc.) and controls the tasks that can be performed on a virtual machine based on its current state (such as taking snapshots). Also manages the addition and removal of devices. When a virtual machine is started, the VMM Service is also responsible for creating a corresponding Virtual Machine Worker Process. |
| Virtual Machine Worker Process | Virtual Machine Worker Processes are started by the VMM Service when virtual machines are started. A Virtual Machine Worker Process (named vmwp.exe) is created for each Hyper-V virtual machine and is responsible for much of the management level interaction between the parent partition Windows Server 2008 system and the virtual machines in the child partitions. The duties of the Virtual Machine Worker Process include creating, configuring, running, pausing, resuming, saving, restoring and snapshotting the associated virtual machine. It also handles IRQs, memory and I/O port mapping through a Virtual Motherboard (VMB). |
| Virtual Devices | Virtual Devices are managed by the Virtual Motherboard (VMB). Virtual Motherboards are contained within the Virtual Machine Worker Processes, of which there is one for each virtual machine. Virtual Devices fall into two categories, Core VDevs and Plug-in VDevs. Core VDevs can either be Emulated Devices or Synthetic Devices. |
| Virtual Infrastructure Driver | Operates in kernel mode (i.e. in the privileged CPU ring) and provides partition, memory and processor management for the virtual machines running in the child partitions. The Virtual Infrastructure Driver (Vid.sys) also provides the conduit for the components higher up the Virtualization Stack to communicate with the hypervisor. |
| Windows Hypervisor Interface Library | A kernel-mode library (named WinHv.sys) located in the parent partition Windows Server 2008 instance and in any guest operating systems which are Hyper-V aware (in other words, modified specifically to operate in a Hyper-V child partition). Allows the operating system's drivers to access the hypervisor using standard Windows API calls instead of issuing hypercalls directly. |
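The relationship between the VMM Service and the Virtual Machine Worker Processes can be sketched as a small state machine. This is a minimal model under stated assumptions: the class `ToyVmmService`, the task names and the `ALLOWED_TASKS` policy are hypothetical and chosen only to show the idea that the permitted tasks depend on the current state, and that starting a virtual machine creates a worker process for it.

```python
from enum import Enum


class VmState(Enum):
    STOPPED = "stopped"
    ACTIVE = "active"
    OFFLINE = "offline"


# Hypothetical policy: which tasks are permitted in which state.
ALLOWED_TASKS = {
    VmState.STOPPED: {"start", "snapshot", "add_device", "remove_device"},
    VmState.ACTIVE: {"stop", "snapshot"},
    VmState.OFFLINE: set(),
}


class ToyVmmService:
    """Toy stand-in for the VMM Service; not the real Windows service."""

    def __init__(self):
        self.states = {}   # VM name -> VmState
        self.workers = {}  # VM name -> description of its worker process

    def register(self, vm_name):
        self.states[vm_name] = VmState.STOPPED

    def request(self, vm_name, task):
        """Carry out a task if the VM's current state allows it."""
        state = self.states[vm_name]
        if task not in ALLOWED_TASKS[state]:
            return False
        if task == "start":
            # One worker process is created per running virtual machine.
            self.workers[vm_name] = f"vmwp.exe for {vm_name}"
            self.states[vm_name] = VmState.ACTIVE
        elif task == "stop":
            self.workers.pop(vm_name, None)
            self.states[vm_name] = VmState.STOPPED
        return True


service = ToyVmmService()
service.register("vm1")
print(service.request("vm1", "stop"))   # refused: the VM is not running
print(service.request("vm1", "start"))  # accepted: a worker is created
print(service.workers)
```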

In addition to the components contained within the Virtualization Stack, the root partition also contains the following components:

| Component | Description |
|---|---|
| VMBus | Part of Hyper-V Integration Services, the VMBus facilitates highly optimized communication between child partitions and the parent partition. |
| Virtualization Service Providers | Virtualization Service Providers (VSPs) reside in the parent partition and provide synthetic device support via the VMBus to Virtualization Service Clients (VSCs) running in child partitions. |
| Virtualization Service Clients | Virtualization Service Clients are synthetic device instances that reside in child partitions. They communicate with the VSPs in the parent partition over the VMBus to fulfill the child partition's device access requests. |
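The request flow between a VSC, the VMBus and a VSP can be sketched with two in-memory queues. Everything here is a hypothetical simplification for illustration: the real VMBus is a kernel-level channel mechanism, and the class names, message format and dictionary-backed "disk" below are assumptions, not Hyper-V interfaces.

```python
from collections import deque


class ToyVMBus:
    """Toy channel with one queue per direction."""

    def __init__(self):
        self.to_parent = deque()  # requests from the child partition
        self.to_child = deque()   # responses from the parent partition


class ToyStorageVsp:
    """Runs in the parent partition and owns access to the device."""

    def __init__(self, bus, disk):
        self.bus = bus
        self.disk = disk  # block number -> bytes

    def service_one(self):
        """Handle one pending request, if any, and queue the response."""
        if not self.bus.to_parent:
            return False
        request = self.bus.to_parent.popleft()
        self.bus.to_child.append(self.disk.get(request["block"], b""))
        return True


class ToyStorageVsc:
    """Runs in the child partition and has no direct device access."""

    def __init__(self, bus):
        self.bus = bus

    def request_read(self, block):
        self.bus.to_parent.append({"op": "read", "block": block})

    def collect(self):
        return self.bus.to_child.popleft() if self.bus.to_child else None


bus = ToyVMBus()
vsp = ToyStorageVsp(bus, disk={7: b"hello"})
vsc = ToyStorageVsc(bus)

vsc.request_read(7)   # child partition asks for block 7
vsp.service_one()     # parent partition performs the device access
print(vsc.collect())
```

The sketch keeps the VSC unaware of the disk entirely, which mirrors the description above: device access requests from the child partition are fulfilled by the parent partition.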

Hyper-V Guest Operating System Types

Hyper-V supports three types of guest operating systems running in child partitions. These are Hyper-V Aware Windows Operating Systems, Hyper-V Aware Non-Windows Operating Systems and Non Hyper-V Aware Operating Systems.

- Hyper-V Aware Windows Operating Systems - Hyper-V aware Windows operating systems (also referred to as enlightened operating systems) are able to detect that they are running on the Hyper-V hypervisor and modify their behavior to maximize performance (such as using hypercalls to directly call the hypervisor). In addition, these operating systems are able to host the Integration Services to perform such tasks as running Virtualization Service Clients (VSCs) which communicate over the VMBus with the Virtualization Service Providers (VSPs) running on the root partition for device access.

- Hyper-V Aware Non-Windows Operating Systems - Non-Windows Hyper-V aware operating systems are also able to run Integration Services and, through the use of VSCs supplied by third parties, access devices via the root partition VSPs. The enlightened operating systems are also able to modify behavior to optimize performance and communicate directly with the hypervisor using hypercalls.

- Non Hyper-V Aware Operating Systems - These operating systems are unaware that they are running on a hypervisor and are unable to run the Integration Services. To support these operating systems, the Hyper-V hypervisor uses emulation to provide access to device and CPU resources. Whilst this approach allows unmodified, unenlightened operating systems to function within Hyper-V virtual machines, the overheads inherent in the emulation process can be significant.
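The three guest types above can be summarized as a small classification sketch. The `GuestOS` dataclass, the `describe` function and the example guest names are hypothetical; the mapping itself simply restates the list: enlightened guests use synthetic devices through VSCs, while unaware guests rely on emulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuestOS:
    name: str
    is_windows: bool
    hyperv_aware: bool


def describe(guest: GuestOS) -> tuple:
    """Return (guest category, device access path) for a guest OS."""
    if not guest.hyperv_aware:
        return ("Non Hyper-V Aware", "emulated devices")
    if guest.is_windows:
        category = "Hyper-V Aware Windows"
    else:
        category = "Hyper-V Aware Non-Windows"
    return (category, "synthetic devices via VSC, VMBus and VSP")


# Illustrative guests only; these are not statements about real products.
for guest in (
    GuestOS("enlightened-windows", is_windows=True, hyperv_aware=True),
    GuestOS("enlightened-other", is_windows=False, hyperv_aware=True),
    GuestOS("legacy-os", is_windows=False, hyperv_aware=False),
):
    print(guest.name, describe(guest))
```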